Real-Time ASoC: Selected results from the project

This page summarizes the main outcomes of the project and gives an overview of the whole project through its published works. For details on each work, refer to its specific project page. Each entry links to the publication, its code, and its citation, and, where available, to supplementary material, project pages, or videos. See the complete list of publications to learn more about the project.


F. Barranco, C. L. Teo, C. Fermüller, Y. Aloimonos, "Contour detection and characterization for asynchronous event sensors," International Conference on Computer Vision, ICCV, 2015. [PDF]

[BibTex]

[Project page and code]

[Spotlight video]

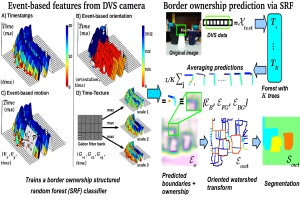

This paper is the first work to use the DVS (Dynamic Vision Sensor) to extract contours and border ownership from event information alone. The bio-inspired, asynchronous, event-based dynamic vision sensor records temporal changes in scene luminance at high temporal resolution. Since events are triggered only by significant luminance changes, most events occur at object boundaries. Detecting these contours is an essential step for further processing. This work presents an approach that learns the location of contours and their border ownership using Structured Random Forests on event-based features: motion, timing, texture, and spatial orientations. Finally, contour detection and border assignment are demonstrated in a proto-segmentation of the scene.
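To make the idea of per-pixel event-based features more concrete, here is a minimal sketch of simple timing-related features computed from a stream of DVS events. The `Event` record, the integer microsecond timestamps, and the particular features (event count, polarity sum, time since the most recent event) are assumptions for illustration only; this is not the feature set or code used in the paper, and the Structured Random Forest that would consume such features is not shown.

```python
from dataclasses import dataclass


@dataclass
class Event:
    x: int  # column on the sensor
    y: int  # row on the sensor
    t: int  # timestamp in microseconds (assumed unit)
    p: int  # polarity: +1 for a brightness increase, -1 for a decrease


def timing_features(events, width, height, t_now):
    """Return {(x, y): (count, polarity_sum, time_since_last_event)}
    for every pixel that received at least one event (hypothetical features)."""
    count = [[0] * width for _ in range(height)]
    polarity = [[0] * width for _ in range(height)]
    last_t = [[None] * width for _ in range(height)]
    for e in events:
        count[e.y][e.x] += 1
        polarity[e.y][e.x] += e.p
        # Keep the most recent timestamp even if events arrive out of order.
        if last_t[e.y][e.x] is None or e.t > last_t[e.y][e.x]:
            last_t[e.y][e.x] = e.t
    features = {}
    for y in range(height):
        for x in range(width):
            if count[y][x] > 0:
                features[(x, y)] = (count[y][x], polarity[y][x], t_now - last_t[y][x])
    return features
```

Because most events fall on object boundaries, even crude per-pixel vectors like these carry contour information; the paper instead learns the mapping from richer motion, timing, texture, and orientation features to contours and border ownership.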


F. Barranco, C. Fermüller, Y. Aloimonos, "Contour motion estimation for asynchronous event-driven cameras," Proceedings of the IEEE, 102(10), 1537-1556, 2014. [PDF]

[BibTex]

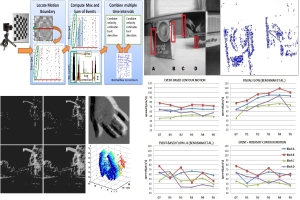

This work presents algorithms for estimating image motion from asynchronous event-based information. Conventional image motion estimation handles depth discontinuities poorly, whereas the methods proposed here provide accurate estimates at contours. The first technique uses only DVS data: it reconstructs the contrast at contours from the events and tracks the contour points over time. Its accuracy stems from the exact event timestamps, which are available only because of the DVS. The second technique combines event-based motion information with intensity information: it computes contrast from the intensity and temporal derivatives from the event-based motion information. The key point is that event-based computation, rather than being considered in isolation, can be viewed as a way to overcome major issues that conventional computer vision is trying to solve. Since conventional strategies are becoming exhausted, integrating frame-based and event-based mechanisms could be a viable alternative. Such integration can greatly reduce the use of computational resources while increasing performance.
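The second technique pairs spatial contrast with an event-based temporal derivative. The sketch below is a textbook normal-flow computation from the gradient constraint $g_x u + g_y v + I_t = 0$, not the paper's algorithm: it takes spatial derivatives from a 3x3 patch of log intensity (DVS events respond to log-luminance changes) and approximates $I_t$ by the net event polarity at the pixel times a hypothetical contrast threshold, divided by the window length. The function name and parameters are assumptions for illustration.

```python
def normal_flow(log_patch, polarity_sum, contrast_threshold, dt):
    """Estimate normal flow (u, v) at the centre of a 3x3 log-intensity patch.

    log_patch: 3x3 list of lists indexed [row][col], centre at [1][1].
    polarity_sum: net polarity (+1 per ON event, -1 per OFF event) at the
        centre pixel during a time window of length dt.
    contrast_threshold: assumed log-intensity change signalled by one event.
    Returns None where the spatial gradient vanishes (no contrast)."""
    # Central differences: x grows with column index, y with row index.
    gx = (log_patch[1][2] - log_patch[1][0]) / 2.0
    gy = (log_patch[2][1] - log_patch[0][1]) / 2.0
    # Temporal derivative of log intensity approximated from the events.
    it = polarity_sum * contrast_threshold / dt
    g2 = gx * gx + gy * gy
    if g2 < 1e-12:
        return None
    # Gradient constraint gx*u + gy*v + it = 0: only the component of the
    # flow along the gradient direction is determined (aperture problem).
    scale = -it / g2
    return (scale * gx, scale * gy)
```

For example, with intensity increasing to the right and OFF events at the pixel, the estimate points to the right: a brightness ramp moving rightward darkens a fixed pixel. The flow component perpendicular to the gradient remains unknown from a single pixel.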


F. Barranco, J. Diaz, B. Pino, E. Ros, "Real-time visual saliency architecture for FPGA with top-down attention modulation," IEEE Trans. on Industrial Informatics, 10(3), 1726-1735, 2014. [PDF]

[BibTex]

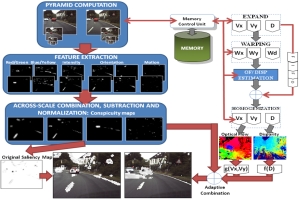

[Code] This project presents an FPGA architecture for computing visual attention by combining a bottom-up saliency stream with a top-down, task-dependent modulation stream. The bottom-up stream includes optical flow, local energy, red-green and blue-yellow color opponencies, and several local orientation maps. The final saliency is modulated by two high-level features: optical flow and disparity. The architecture includes feedback masks that adapt the weights of the bottom-up features to the specific target application. Target applications include ADAS (Advanced Driver Assistance Systems), video surveillance, and robotics.
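A small software sketch can illustrate the general structure of combining weighted bottom-up feature maps with a top-down modulation stream. The min-max normalization, the weighted average, and the multiplicative rule `bottom_up * (1 + gain * top_down)` are hypothetical choices for this example, not the fixed-point arithmetic of the FPGA architecture; the weight lists merely stand in for the application-dependent feature weighting.

```python
def normalize(m):
    """Min-max normalize a 2D map (list of lists) to [0, 1]; constant maps become 0."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def weighted_sum(maps, weights):
    """Weighted average of equally sized 2D maps."""
    h, w = len(maps[0]), len(maps[0][0])
    total = sum(weights)
    return [[sum(wt * mp[y][x] for mp, wt in zip(maps, weights)) / total
             for x in range(w)] for y in range(h)]


def saliency(bottom_up_maps, bu_weights, top_down_maps, td_weights, gain=1.0):
    """Hypothetical combination: bottom-up saliency boosted by top-down cues."""
    bu = weighted_sum([normalize(m) for m in bottom_up_maps], bu_weights)
    td = weighted_sum([normalize(m) for m in top_down_maps], td_weights)
    return [[b * (1.0 + gain * t) for b, t in zip(brow, trow)]
            for brow, trow in zip(bu, td)]
```

Using `(1 + gain * top_down)` rather than a plain product keeps bottom-up saliency alive in regions where the top-down cues (for instance, flow or disparity) are weak; setting `gain=0` reduces the output to pure bottom-up saliency.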


F. Barranco, C. Fermüller, Y. Aloimonos, T. Delbruck, "A dataset for visual navigation with neuromorphic methods," Frontiers in Neuroscience: Neuromorphic Engineering, 10, 2016. [PDF]

[BibTex]

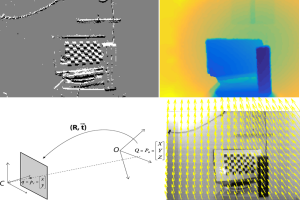

[Project page and code] Standardized benchmarks for Computer Vision are very powerful tools for developing new and improved techniques in the field. Frame-free, event-driven vision still lacks datasets for assessing the accuracy of its methods. This dataset provides both frame-free event data and classic image, motion, and depth data, so that different event-based methods can be evaluated and compared with conventional frame-based Computer Vision. We hope it will help researchers understand the potential of event-based vision as a new technology. Event-based sensors and frame-based cameras record very different kinds of data streams: frame-free sensors collect events triggered by changes in luminance, while conventional sensors record the luminance of the scene itself. Comparing methods across such heterogeneous sensors therefore requires conventional and frame-free sensors recording the same scene.
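Comparing event-based and frame-based methods on the same scene requires relating the two data streams in time. As a minimal sketch, assuming that event and frame timestamps share a common clock, events can be grouped by the frame interval in which they occur. The plain timestamp lists and the function name are a hypothetical layout for illustration, not the dataset's actual file format.

```python
from bisect import bisect_right


def events_per_frame(event_ts, frame_ts):
    """Group events by frame interval [frame_ts[k], frame_ts[k + 1]).

    event_ts: event timestamps, in any order, on the same clock as the frames.
    frame_ts: frame timestamps sorted in ascending order.
    Returns one list of event indices per interval; events before the first
    frame or at/after the last frame are dropped."""
    bins = [[] for _ in range(max(len(frame_ts) - 1, 0))]
    for i, t in enumerate(event_ts):
        # Index of the last frame whose timestamp is <= t.
        k = bisect_right(frame_ts, t) - 1
        if 0 <= k < len(bins):
            bins[k].append(i)
    return bins
```

Each bin then holds the events that an event-based method would process between two frames, so its output can be compared with a frame-based result computed on the frame pair.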

Other publications

- F. Barranco, C. Fermüller, Y. Aloimonos, "Estimación de movimiento propio y flujo usando el movimiento de las fronteras" [Ego-motion and flow estimation using boundary motion], Jornadas de Computación Empotrada, Valladolid, Spain.

- C. Fermüller, F. Wang, Y. Yang, K. Zampogiannis, Y. Zhang, F. Barranco, M. Pfeiffer, "Prediction of manipulation actions", International Journal of Computer Vision (second review), 2016.

- F. Barranco, C. Fermüller, Y. Aloimonos, "Bio-inspired Motion Estimation with Event-Driven Sensors", Advances in Computational Intelligence, IWANN 2015 (Palma de Mallorca, Spain), 2015.

- F. Barranco, C. Fermüller, Y. Aloimonos, "Asynchronous event-driven cues for border detection and segmentation", Demo Session: Advances in Computational Intelligence, IWANN 2015, 2015.